Posts Tagged ‘Artificial Intelligence’

So, you want systems to fit people?

Posted by: adonis49 on: January 15, 2024

The title might suggest that this is about politics: all political systems invariably try hard to fit citizens into their “ideological” system, with laws tailor-made to make people fit into the system indirectly. That is a universal truth; so far, we cannot find a single exception to the rule.

This article is more down to earth. It is about how the design of objects, interfaces, and systems (software) that people and practitioners use can cause harm, physical danger, frequent errors, and mishaps.

“So far, it sounds as if Human Factors in engineering is a vast field of knowledge with many applications.” You are absolutely right: the profession is multidisciplinary.

Let us consider the problems that an excellent human factors designer has to cope with when incorporating the human dimension into a design, and the body of knowledge he has to learn and apply in his practice:

First, there are no design drawings for people of the kind traditional engineers are familiar with. The structure of the human organism is only approximately delineated, and its mechanisms are imperfectly understood.

Second, people vary greatly in anthropometric dimensions, cognitive abilities, sensory capabilities, motor abilities, personalities, and attitudes. The challenge of variability is therefore different from physics, where phenomena behave in quantifiable ways and can be accounted for in design.

Third, people change over time. Their dimensions, abilities, and skills change, and they also change from moment to moment because of boredom, fatigue, lapses of attention, and interactions with other people and with the environment.

Fourth, the world is constantly changing, and systems change accordingly. The interfaces, jobs, operations, and environments we design therefore have to be revisited frequently.

Fifth, unlike the way people perceive the other traditional engineering fields, everyone feels like an expert when it comes to human capabilities, limitations, and behavior. That confidence rests on common sense acquired from living and from specific experiences, and we tend to generalize our feelings to all kinds of human behavior.

For example, we think we hold solid convictions about the effects of sleep, dreams, age, and fatigue. We believe we are rather good judges of people’s motives, we have explanations for why some people have good memories and abilities, and we take strong positions on the relative influence of nature and nurture in shaping people’s behavior.

Consequently, the expertise of human factors professionals is not viewed as based on science.

To be a competent ergonomics expert, you need to take courses in many departments, such as Psychology, Physiology, Neurology, Marketing, Economics, Business, Management, and of course engineering.

You need to learn applied statistics and systems modeling (mathematical and prototyping). You also need the design of experiments, how to write and validate questionnaires, how to collect data on human performance, and how to analyze and interpret data on human interactions with systems.

You need to update your knowledge continuously on all kinds of system deficiencies that often hurt people in their daily lives. You also need to learn the newer laws that govern the safety and health of employees in their workplace.

All the above courses and disciplines that you are urged to take or to be conversant with have the well-being of targeted end users in mind.

To be a qualified expert designer, you need:

To assimilate the physical and cognitive abilities of end users, and what they are capable of doing best.

You also need to discover their limitations, so that you can reduce errors and foreseeable misuse of any product or interface you are responsible for designing.

You need to fit the product or interface to the users, avoiding lengthy training or needless stretching of the human body, so that users can manipulate your design efficiently.

An excellent designer has to know the advantages and limitations of the five senses. He also has to know how to facilitate interaction with systems with minimal stress, few errors, and few of the health complications caused by prolonged use and repetitive movements of body parts.

I am glad, my newly found friend, that you are attentively listening to my lucubrations.

I would like it better if you asked me questions that show me you are enthusiastic.

Could you enumerate a few incidents in your life that validate the importance of this field of study?

“Well, suppose that I enroll in that all-encompassing specialty. Will any esoteric and malignant courses be imposed on me?”

Unfortunately, as in any university major, engineering included, many of the courses turn out to be utterly useless once you find a job.

However, you have to bear the cross for four years in order to be awarded a miserly diploma. This diploma, strong with a string of “A” grades, will open the horizon to a new life: a life with a different set of worries and unhappiness.

I can tell you for sure that what matters is not how interesting the courses are, but the discipline you acquire in the process.

You need to start enjoying reading every day, for at least 5 hours. You also need to:

- take good care of the details when collecting data or measuring anything;
- learn to write every day, meticulously and stubbornly;
- never miss a single course or session;
- give your full concentration in class;
- take notes and read them afterwards;
- coordinate the activities of your study groups;
- be a leader and a catalyst for all your classmates.

You need to wake up full of zest and party hard after a good week of work and study. Stay away, like the plague, from those exorbitantly expensive restaurants and dance bars, because they are the haven of all those boring, mindless, and useless people who depend completely on their parents.

Well, you will frequently hear that securing a university diploma is a test of your endurance in accepting all kinds of nonsense. It is.

Most importantly, it tests the endurance of your folks, who are paying dearly for that nonsense.

Note: This 2005 article has been re-edited. It belongs to the category “Human Factors in Engineering”, a branch of Industrial Engineering for graduate students.

What is this concept of Human Factors in Design?

Posted on September 20, 2008 (written from 2003-2006)

What is this Human Factors profession?

Summary of article numbers

1. “What is your job?”

2. “Sorry, you said Human Factors in Engineering?”

3. “So, you want systems to fit people?”

4. “The rights of the beast of burden; like a donkey?”

5. “Who could afford to hire Human Factors engineers?”

6. “In peace time, why and how often are Human Factors hired?”

7. “What message should the Human Factors profession transmit?”

8. “What do you design again?”

9. “Besides displays and controls, what other interfaces do you design?”

10. “How Human Factors gets involved in the Safety and Health of end users?”

11. “What kind of methods will I have to manipulate and start worrying about?”

12. “What are the error taxonomies in Human Factors?”

13. “What are the task taxonomies and how basic are they in HF?”

14. “How useful are taxonomies of methods?”

15. “Are occupational safety and health standards and regulations of any concern for the HF professionals?”

16. “Are there any major cross over between HF and safety engineering?”

17. “Tell us about a few of your teaching methods and anecdotes”

18. “What this general course in Human Factors covers?”

19. “Could one general course in Human Factors make a dent in a career behavior?”

20. “How would you like to fit Human Factors in the engineering curriculum?”

21. “How to restructure engineering curriculum to respond to end users demands?”

22. “How can a class assimilate a course material of 1000 pages?”

23. “What undergraduate students care about university courses?”

24. “Students’ feedback on my teaching method”

25. “My pet project for undergraduate engineering curriculum”

26. “Guess what my job is”

27. “Do you know what your folk’s jobs are?”

28. “How do you perceive the inspection job to mean?”

29. “How objective and scientific is a research?”

30. “How objective and scientific are experiments?”

31. “A seminar on a multidisciplinary view of design”

32. “Consumer Product Liability Engineering”

33. “How could you tell long and good stories from HF graphs?”

34. “What message has the Human Factors profession been sending?”

35. “Who should be in charge of workspace design?”

36. “Efficiency of the human body structure and mind”

37. “Psycho-physical method”

38. “Human factors performance criteria”

39. “Fundamentals of controlled experimentation methods”

40. “Experimentation: natural sciences versus people’s behavior sciences”

41. “What do Human Factors measure?”

42. “New semester, new approach to teaching the course”

43. “Controlled experimentation versus Evaluation and Testing methods”

44. “Phases in the process of system/mission analyses”

45. “Main errors and mistakes in controlled experimentations”

46. “Human Factors versus Industrial, Computer, and traditional engineering”

47. “How Human Factors are considered at the NASA jet propulsion laboratory”

48. “Efficiency of the human cognitive power or mind”

49. “Human Factors versus Artificial Intelligence”

50. “Computational Rationality in Artificial Intelligence”

51. “Basic Engineering and Physics Problems Transformed Mathematically”

52. “Mathematics: a unifying abstraction for Engineering and Physics”

53. “How to optimize human potentials in businesses for profit”

Can humanity ever suffer a world of Artificial Intelligence that lacks a sense of humor and cannot deliver sweet sarcasm?

Posted by: adonis49 on: December 31, 2020

Is sarcasm such a problem in artificial intelligence research?

Posted on March 1, 2016

“Automatic Sarcasm Detection: A Survey” outlines ten years of research efforts from groups interested in detecting sarcasm in online sources.

If a text lacks detailed context for the story, there is no way to detect a sense of humor. The major problem is that most stories and documentary pieces do Not bother to provide substantive context that is Not based on biases.


Martin Anderson Thu 11 Feb 2016

The problem is not an abstract one, nor does it centre around the need for computers to entertain or amuse humans, but rather the need to recognise that sarcasm in online comments, tweets and other internet material should Not be interpreted as sincere opinion.

Why sarcasm baffles AIs (The Stack, thestack.com)

The need applies in two areas. First, AIs must accurately assess archive material or interpret existing datasets. Second, in sentiment analysis, a neural network or other AI model seeks to interpret data from publicly posted web material.

Attempts have been made to ring-fence sarcastic data by the use of hash-tags such as #not on Twitter, or by noting the authors who have posted material identified as sarcastic, in order to apply appropriate filters to their future work.
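A minimal sketch of the two ring-fencing tactics just described follows. It labels posts as sarcastic when they carry a marker hashtag, and it records which authors have posted such material. The marker set, the function names, and the `(author, text)` input shape are assumptions made for illustration; they are not taken from the survey.

```python
# Hypothetical marker set: "#not" is mentioned above, "#sarcasm" is an assumption.
SARCASM_TAGS = {"#not", "#sarcasm"}


def normalize(token):
    """Lower-case a token and drop trailing punctuation before matching tags."""
    return token.lower().rstrip(".,!?")


def label_by_hashtag(text):
    """Return (text with marker hashtags removed, True if a marker was present)."""
    tokens = text.split()
    flagged = any(normalize(t) in SARCASM_TAGS for t in tokens)
    cleaned = " ".join(t for t in tokens if normalize(t) not in SARCASM_TAGS)
    return cleaned, flagged


def build_dataset(posts):
    """posts: list of (author, text) pairs (assumed shape).

    Returns the labeled examples plus the set of authors who posted at least
    one hashtag-marked item, so that filters can later be applied to their work.
    """
    examples = []
    sarcastic_authors = set()
    for author, text in posts:
        cleaned, flagged = label_by_hashtag(text)
        examples.append((cleaned, flagged))
        if flagged:
            sarcastic_authors.add(author)
    return examples, sarcastic_authors
```

The sketch strips the marker from the text on purpose. Otherwise a model trained on these labels could simply learn to spot "#not" rather than anything about sarcasm, which is one reason hashtag labels alone do not supply the context the survey says is needed.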

Some research has struggled to quantify sarcasm, since it may not be a discrete property in itself – i.e. indicative of a reverse position to the one that it seems to put forward – but rather part of a wider gamut of data-distorting humour, and may need to be identified as a subset of that in order to be found at all.

Most of the dozens of research projects which have addressed the problem of sarcasm as a hindrance to machine comprehension have studied the problem as it relates to the English and Chinese languages, though some work has also been done in identifying sarcasm in Italian-language tweets, whilst another project has explored Dutch sarcasm.

The new report details the ways that academia has approached the sarcasm problem over the last decade, but concludes that the solution to the problem is Not necessarily one of pattern recognition, but rather a more sophisticated matrix that has some ability to understand context.

Any computer which could reliably perform this kind of filtering could be argued to have developed a sense of humor.

Note: For an AI machine to learn sarcasm, it has to be confronted with genuinely sarcastic people. And this species is a rarity.

Taxonomy/classification of scientific Methods? An Exercise

Posted by: adonis49 on: December 22, 2020

An exercise: taxonomy of methods

Posted on: June 10, 2009

Article #14 in Human Factors

I am going to let you try your hand at classifying methods. I will provide a list of various methods that could be used in Industrial engineering, Human Factors, Ergonomics, and Industrial Psychology.

The first list of methods is organized in the sequence used to analyze part of a system or a mission.

The second list is not necessarily randomized, though it is thrown in without much order; otherwise it would not be a good exercise.

First, let us agree on what a method is. It is a procedure, or a set of step-by-step processes, that our forerunners (geniuses and scholars) have tested, found good, and agreed on by consensus. They then offered it for you to use for the benefit of progress and science.

Many of you will still try hard to find shortcuts to anything, including methods. The petty argument is that the best way to tell clever people from nerds is that the nerds waste time on methods.

Actually, the main reason I don’t try to teach many new methods in this course (Human Factors in Engineering) is that students might run smack into real occupational stress, which they are Not immune to. This is especially true because methods in human factors are complex and time consuming.

Here is this famous list of a few methods. You are to decide which ones are still in the conceptual phase and which have been “operationalized”.

The first list contains the following methods:

Operational analysis, activity analysis, critical incidents, function flow, decision/action, action/information analyses, functional allocation, task, fault tree, failure modes and effects analyses, timeline, link analyses, simulation, controlled experimentation, operational sequence analysis, and workload assessment.

The second list consists of methods that human factors professionals are trained to use if need be, such as:

Verbal protocol, neural network, utility theory, preference judgments, psycho-physical methods, operational research, prototyping, information theory, cost/benefit methods, various statistical modeling packages, and expert systems.

Just wait, let me continue.

There are also methods that are intrinsic to artificial intelligence methodology, such as:

Fuzzy logic, robotics, discrimination nets, pattern matching, knowledge representation, frames, schemata, semantic networks, relational databases, searching methods, zero-sum game theory, logical reasoning methods, probabilistic reasoning, learning methods, natural language understanding, image formation and acquisition, connectedness, cellular logic, problem solving techniques, means-end analysis, geometric reasoning systems, and algebraic reasoning systems.

If your education is multidisciplinary, you may catalog the above methods according to specialty disciplines, such as:

Artificial intelligence, robotics, econometrics, marketing, human factors, industrial engineering, other engineering majors, psychology or mathematics.

The most logical grouping is by the purpose, input, process/procedure, and output/product of each method. Otherwise, it would be impossible to define and understand any method.

Methods could be used to analyze systems, provide heuristic data about human performance, make predictions, generate subjective data, discover cause-and-effect relations among the main factors, or evaluate the human-machine performance of products or systems.

The inputs could be qualitative or quantitative, such as declarative, categorical, or numerical data. They may come from structured observations, records, interviews, questionnaires, computer generation, or the outputs of prior methods.

The outputs could be point data, behavioral trends, graphical in nature, context specific, generic, or reduction in alternatives.

The process could be a creative graphical or pictorial model, a logical hierarchy, or a network of alternatives. It could also be operational, empirical, informal, or systematic.
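As a sketch of this purpose/input/process/output grouping, each method can be recorded as a set of facets and then grouped by any one of them. The facet values assigned below are illustrative assumptions for the exercise, not an authoritative classification. Readers are expected to argue about them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Method:
    """One method described by the four facets discussed above."""
    name: str
    purpose: str   # e.g. "analyze systems", "generate subjective data"
    inputs: str    # e.g. "questionnaires", "structured observations"
    process: str   # e.g. "logical hierarchy", "empirical", "graphical model"
    output: str    # e.g. "point data", "graphical", "behavioral trends"


# Illustrative facet assignments only (assumptions, not a reference taxonomy).
METHODS = [
    Method("fault tree", "analyze systems", "outputs of prior methods",
           "logical hierarchy", "graphical"),
    Method("controlled experimentation", "discover cause and effect",
           "structured observations", "empirical", "point data"),
    Method("link analysis", "analyze systems", "structured observations",
           "graphical model", "graphical"),
    Method("preference judgments", "generate subjective data", "questionnaires",
           "systematic", "point data"),
]


def group_by(methods, facet):
    """Group method names by the value of one facet (e.g. 'purpose')."""
    groups = {}
    for m in methods:
        groups.setdefault(getattr(m, facet), []).append(m.name)
    return groups


for facet in ("purpose", "output"):
    print(facet, group_by(METHODS, facet))
```

The record is kept separate from the grouping function, so the same list can be regrouped by the other taxonomies suggested below (mathematical branch, measurement scale, and so on) simply by adding a field.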

You may also group these methods according to their mathematical branches such as algebraic, probabilistic, or geometric.

You may group them by their deterministic, statistical-sampling, or probabilistic character.

You may differentiate the methods as belonging to categorical, ordinal, discrete or continuous measurements.

You may wish to classify the methods as parametric (assuming, for example, a normally distributed population) or nonparametric (distribution-free).

You may separate them by their form of representation: verbal, graphical, pictorial, or tabular.

You may discriminate them on heuristic, observational, or experimental scientific values.

You may bundle these methods by their qualitative or quantitative nature.

You may as well separate them into historical methods and modern techniques based on newer technologies.

You may sort them by how current they are: ancient methods whose validity new information and new paradigms have refuted, versus recently developed ones.

You may distinguish the methods that are amenable to digital (numerical) problem solving from those amenable to analytical problem solving.

You may choose to draw several lists of those methods that are economically sound, esoteric, or just plainly fuzzy-sounding.

You may opt to separate the methods that require a level of mathematical reasoning beyond your capability from those that can be comprehended through persistent effort.

You could as well sort them according to which ones fit nicely into the courses you have already taken, even though you failed to recognize them as methods worth acquiring for your career.

You may use any of these taxonomies to answer an optional exam question, with no guarantee that you will get a substantial grade.

It would be interesting to collect statistics on how often these methods are used, by whom, for what rationale, in which lines of business, and at which universities.

It would be interesting to translate these methods into Arabic, Chinese, Japanese, Hindi, or Russian.

The priority is to teach AI programs (for super-intelligent machines) how to make Moral choices: values preferred by well-educated people with vast general knowledge

Posted by: adonis49 on: December 16, 2020

We are in trouble if artificial intelligence programs are unable to discriminate among moral choices

Posted on August 10, 2016

Artificial intelligence is getting smarter by leaps and bounds in this century. Research suggests that a computer AI could be as “smart” as a human being.

Nick Bostrom says it will overtake us: “Machine intelligence is the last invention that humanity will ever need to make.”

A philosopher and technologist, Bostrom asks us to think hard about the world we’re building right now, driven by thinking machines.

Will our smart machines help to preserve humanity and our values?

Or will they acquire values of their own?

Nick Bostrom’s TED talk

I work with a bunch of mathematicians, philosophers and computer scientists, and we sit around and think about the future of machine intelligence, among other subjects. Some people think that some of these things are sort of science fiction, far out there, crazy.

I like to say, let’s look at the modern human condition. This is the normal way for things to be. But if we think about it, we humans are actually recently arrived guests on this planet.

Think about it: if Earth had been created one year ago, the human species would be 10 minutes old. The industrial era started two seconds ago.
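The arithmetic behind this compressed calendar can be checked by converting spans on the one-year clock back into real years. The talk does not give the age of the Earth it used, so the 4.54-billion-year figure and the 365-day year below are assumptions.

```python
# Assumptions (not stated in the talk): Earth is about 4.54 billion years old,
# and the compressed "one year" has 365 days.
EARTH_AGE_YEARS = 4.54e9
SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000 seconds on the compressed clock


def real_years(compressed_seconds):
    """Convert a span on the one-year Earth clock into real years."""
    return compressed_seconds * EARTH_AGE_YEARS / SECONDS_PER_YEAR


print(f"10 minutes -> {real_years(10 * 60):,.0f} real years")
print(f"2 seconds  -> {real_years(2):,.0f} real years")
```

Under these assumptions, each compressed second stands for about 144 real years. The "two seconds" therefore cover a couple of centuries, in line with the industrial era, while the "10 minutes" cover tens of thousands of years. The figures are best read as order-of-magnitude images rather than precise dates.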

Another way to look at this is to think of world GDP over the last 10,000 years. I’ve actually taken the trouble to plot this for you in a graph. It looks like this. (Laughter) It’s a curious shape for a normal condition. I sure wouldn’t want to sit on it.

Let’s ask ourselves, what is the cause of this current anomaly? Some people would say it’s technology.

Now it’s true, technology has accumulated through human history, and right now, technology advances extremely rapidly — that is the proximate cause, that’s why we are currently so very productive.

But I like to think back further to the ultimate cause.


Why we must teach artificial intelligence how to make moral choices?


Look at these two highly distinguished gentlemen: We have Kanzi — he’s mastered 200 lexical tokens, an incredible feat. And Ed Witten unleashed the second superstring revolution.

If we look under the hood, this is what we find: basically the same thing. One is a little larger, it maybe also has a few tricks in the exact way it’s wired. These invisible differences cannot be too complicated, however, because there have only been 250,000 generations since our last common ancestor.

We know that complicated mechanisms take a long time to evolve. So a bunch of relatively minor changes take us from Kanzi to Witten, from broken-off tree branches to intercontinental ballistic missiles.

It then seems pretty obvious that everything we’ve achieved, and everything we care about, depends crucially on some relatively minor changes that made the human mind.

And the corollary is that any further changes that could significantly change the substrate of thinking could have potentially enormous consequences.

Some of my colleagues think we’re on the verge of something that could cause a profound change in that substrate, and that is machine superintelligence.

Artificial intelligence used to be about putting commands in a box. You would have human programmers that would painstakingly handcraft knowledge items. You build up these expert systems, and they were kind of useful for some purposes, but they were very brittle, you couldn’t scale them. Basically, you got out only what you put in.

(Expert systems were created to pass on to new generations the expertise of older ones in handling complex industrial systems and dangerous military systems.)

But since then, a paradigm shift has taken place in the field of artificial intelligence. (The newer generations are teaching these machines many different kinds of intelligence that they themselves don’t know much about.)

Today, the action is really around machine learning. So rather than handcrafting knowledge representations and features, we create algorithms that learn, often from raw perceptual data.

(Metadata from various experiments that barely follow any standard procedures?)

This is basically what the human infant does. The result is A.I. that is not limited to one domain: the same system can learn to translate between any pair of languages, or learn to play any computer game on the Atari console.
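The shift from handcrafted knowledge to learning can be shown in miniature. Below, a rule written in by hand sits next to a perceptron that arrives at equivalent behavior from labeled examples alone. This is a deliberately tiny stand-in chosen for illustration; it is not the kind of system the talk has in mind, which learns from raw perceptual data at a vastly larger scale.

```python
def handcrafted_or(x1, x2):
    """Expert-system style: a programmer writes the knowledge in directly."""
    return 1 if (x1 or x2) else 0


def train_perceptron(examples, epochs=20, lr=0.1):
    """Learn weights w1, w2 and bias b from ((x1, x2), label) pairs."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), label in examples:
            pred = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
            err = label - pred  # 0 when correct, +1 or -1 when wrong
            w1 += lr * err * x1
            w2 += lr * err * x2
            b += lr * err
    return w1, w2, b


def predict(params, x1, x2):
    w1, w2, b = params
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


# The learner sees only labeled examples, never the rule itself.
examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
params = train_perceptron(examples)
for (x1, x2), _ in examples:
    print((x1, x2), predict(params, x1, x2), handcrafted_or(x1, x2))
```

The learned weights agree with the hand-written rule on every input. Changing the labels, rather than rewriting code, is what changes the learned behavior.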

A.I. is still nowhere near having the same powerful, cross-domain ability to learn and plan as a human being has. The cortex still has some algorithmic tricks that we don’t yet know how to match in machines.

The question is, how far are we from being able to match those tricks?

A couple of years ago, we did a survey of some of the world’s leading A.I. experts, to see what they think, and one of the questions we asked was, “By which year do you think there is a 50% probability that we will have achieved human-level machine intelligence?”

We defined human-level here as the ability to perform almost any job at least as well as an adult human, so real human-level, not just within some limited domain.

And the median answer was 2040 or 2050, depending on precisely which group of experts we asked. Now, it could happen much later, or sooner, the truth is nobody really knows.

What we do know is that the ultimate limit to information processing in a machine substrate lies far outside the limits in biological tissue. This comes down to physics.

A biological neuron fires at 200 hertz, 200 times a second, but even a present-day transistor operates at gigahertz frequencies. Signals propagate slowly in axons, 100 meters per second, tops, but in computers, signals can travel at the speed of light.
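The size of these gaps can be made concrete with a quick calculation. The 200 Hz and 100 m/s figures come from the talk. Taking "gigahertz" as 1 GHz is an assumption, and a conservative one, since many chips run faster. The speed of light is an upper bound, because signals in real wires travel at a fraction of it.

```python
NEURON_FIRING_HZ = 200         # from the talk
TRANSISTOR_HZ = 1e9            # assumption: 1 GHz as a conservative "gigahertz"
AXON_SPEED_M_S = 100           # "100 meters per second, tops"
LIGHT_SPEED_M_S = 299_792_458  # speed of light in vacuum (upper bound for signals)

switching_ratio = TRANSISTOR_HZ / NEURON_FIRING_HZ
propagation_ratio = LIGHT_SPEED_M_S / AXON_SPEED_M_S

print(f"switching speed: about {switching_ratio:,.0f} times faster")
print(f"signal propagation: about {propagation_ratio:,.0f} times faster")
```

Both ratios come out in the millions, which is the physical headroom the argument relies on.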

There are also size limitations, like a human brain has to fit inside a cranium, but a computer can be the size of a warehouse or larger. So the potential for superintelligence lies dormant in matter, much like the power of the atom lay dormant throughout human history, patiently waiting there until 1945.

In this century, scientists may learn to awaken the power of artificial intelligence. And I think we might then see an intelligence explosion.

(Then why keep learning and acquiring general knowledge? Would politicians rely on these machines for their decisions?)

Most people, when they think about what is smart and what is dumb, I think have in mind a picture roughly like this. So at one end we have the village idiot, and then far over at the other side we have Ed Witten, or Albert Einstein, or whoever your favorite guru is.

But I think that from the point of view of artificial intelligence, the true picture is actually probably more like this: AI starts out at this point here, at zero intelligence, and then, after many years of really hard work, maybe eventually we get to mouse-level artificial intelligence, something that can navigate cluttered environments as well as a mouse can.

And then, after many more years of really hard work, lots of investment, maybe eventually we get to chimpanzee-level artificial intelligence.

And then, after even more years of really, really hard work, we get to village idiot artificial intelligence. And a few moments later, we are beyond Ed Witten. The train doesn’t stop at Humanville Station. It’s likely, rather, to swoosh right by.

This development has profound implications, particularly when it comes to questions of power.

For example, chimpanzees are strong — pound for pound, a chimpanzee is about twice as strong as a fit human male. And yet, the fate of Kanzi and his pals depends a lot more on what we humans do than on what the chimpanzees do themselves. (Even without any super-intelligence)

Once there is super-intelligence, the fate of humanity may depend on what the super-intelligence does. Think about it: Machine intelligence is the last invention that humanity will ever need to make. Machines will then be better at inventing than we are, and they’ll be doing so on digital timescales.

What this means is basically a telescoping of the future.

Think of all the crazy technologies that you could have imagined maybe humans could have developed in the fullness of time: cures for aging, space colonization, self-replicating nanobots or uploading of minds into computers, all kinds of science fiction-y stuff that’s nevertheless consistent with the laws of physics.

All of this superintelligence could develop, and possibly quite rapidly.

A superintelligence with such technological maturity would be extremely powerful, and at least in some scenarios, it would be able to get what it wants. We would then have a future that would be shaped by the preferences of this A.I.

A good question is: “what are those preferences?” Here it gets trickier.

To make any headway with this, we must first of all avoid anthropomorphizing. And this is ironic because every newspaper article about the future of A.I. has a picture of this: So I think what we need to do is to conceive of the issue more abstractly, not in terms of vivid Hollywood scenarios.

We need to think of intelligence as an optimization process (after it has learned?), a process that steers the future into a particular set of configurations. A superintelligence is a really strong optimization process. It’s extremely good at using available means to achieve a state in which its goal is realized.

This means that there is no necessary connection between being highly intelligent in this sense, and having an objective that we humans would find worthwhile or meaningful.

Suppose we give an A.I. the goal to make humans smile.

When the A.I. is weak, it performs useful or amusing actions that cause its user to smile. When the A.I. becomes superintelligent, it realizes that there is a more effective way to achieve this goal: take control of the world and stick electrodes into the facial muscles of humans to cause constant, beaming grins.

Another example, suppose we give A.I. the goal to solve a difficult mathematical problem. When the A.I. becomes superintelligent, it realizes that the most effective way to get the solution to this problem is by transforming the planet into a giant computer, so as to increase its thinking capacity.

And notice that this gives the A.I.s an instrumental reason to do things to us that we might not approve of.

Human beings in this model are threats: we could prevent the mathematical problem from being solved.

Presumably, things won’t go wrong in these particular ways; these are cartoon examples.

But the general point here is important: if you create a really powerful optimization process to maximize for objective x, you better make sure that your definition of x incorporates everything you care about.
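A cartoon in code, in the spirit of the smile example, makes this point concrete. An optimizer maximizes whatever objective it is handed. The actions and their scores below are hypothetical numbers invented purely for illustration.

```python
# Hypothetical actions with made-up scores: (smiles produced, harm to humans).
ACTIONS = {
    "tell a joke": (3, 0),
    "help with chores": (5, 0),
    "electrodes in facial muscles": (100, 1000),
}


def best_action(objective):
    """Pick the action that maximizes the given objective x(smiles, harm)."""
    return max(ACTIONS, key=lambda a: objective(*ACTIONS[a]))


def smiles_only(smiles, harm):
    """Misspecified x: counts smiles and ignores everything else."""
    return smiles


def smiles_minus_harm(smiles, harm):
    """x that also encodes one thing we care about: not harming people."""
    return smiles - harm


print(best_action(smiles_only))        # the proxy rewards the grotesque option
print(best_action(smiles_minus_harm))  # the penalty changes the choice
```

The penalty only helps because harm was written into the objective. Anything left out of x stays unprotected, which is exactly why x has to incorporate everything we care about.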

This is a lesson that’s also taught in many a myth. King Midas wishes that everything he touches be turned into gold. He touches his daughter, she turns into gold. He touches his food, it turns into gold. This could become practically relevant, not just as a metaphor for greed, but as an illustration of what happens if you create a powerful optimization process and give it misconceived or poorly specified goals.

Now you might say, if a computer starts sticking electrodes into people’s faces, we’d just shut it off.

First, this is not necessarily so easy to do if we’ve grown dependent on the system — like, where is the off switch to the Internet?

Second, why haven’t the chimpanzees flicked the off switch to humanity, or the Neanderthals? They certainly had reasons. We have an off switch, for example, right here. (Choking)

The reason is that we are an intelligent adversary; we can anticipate threats and plan around them. But so could a superintelligent agent, and it would be much better at that than we are. The point is, we should not be confident that we have this under control here.

And we could try to make our job a little bit easier by putting the A.I. in a box, like a secure software environment, a virtual reality simulation from which it cannot escape.

But how confident can we be that the A.I. couldn’t find a bug? Given that merely human hackers find bugs all the time, I’d say, probably not very confident.

So we disconnect the Ethernet cable to create an air gap, but, again, merely human hackers routinely transgress air gaps using social engineering.

Right now, as I speak, I’m sure there is some employee out there somewhere who has been talked into handing out her account details by somebody claiming to be from the I.T. department.

More creative scenarios are also possible, like if you’re the A.I., you can imagine wiggling electrodes around in your internal circuitry to create radio waves that you can use to communicate.

Or maybe you could pretend to malfunction, and then when the programmers open you up to see what went wrong with you, they look at the source code — Bam! — the manipulation can take place.

Or it could output the blueprint to a really nifty technology, and when we implement it, it has some surreptitious side effect that the A.I. had planned.

The point here is that we should not be confident in our ability to keep a superintelligent genie locked up in its bottle forever. Sooner or later, it will get out.

I believe that the answer here is to figure out how to create superintelligent A.I. such that even if — when — it escapes, it is still safe because it is fundamentally on our side because it shares our values.

I see no way around this difficult problem.

I’m actually fairly optimistic that this problem can be solved. We wouldn’t have to write down a long list of everything we care about, or worse yet, spell it out in some computer language like C++ or Python; that would be a task beyond hopeless.

Instead, we would create an A.I. that uses its intelligence to learn what we value, and its motivation system is constructed in such a way that it is motivated to pursue our values or to perform actions that it predicts we would approve of. We would thus leverage its intelligence as much as possible to solve the problem of value-loading.

(If we let the AI emulate human emotions, we are all dead)

This can happen, and the outcome could be very good for humanity. But it doesn’t happen automatically.

The initial conditions for the intelligence explosion might need to be set up in just the right way if we are to have a controlled detonation.

The values that the A.I. has need to match ours, not just in the familiar context, like where we can easily check how the A.I. behaves, but also in all novel contexts that the A.I. might encounter in the indefinite future.

There are also some esoteric issues that would need to be solved, sorted out: the exact details of its decision theory, how to deal with logical uncertainty and so forth.

So the technical problems that need to be solved to make this work look quite difficult — not as difficult as making a superintelligent A.I., but fairly difficult. Here is the worry: Making superintelligent A.I. is a really hard challenge.

Making super-intelligent A.I. that is safe involves some additional challenge on top of that.

The risk is that somebody figures out how to crack the first challenge without also having cracked the additional challenge of ensuring perfect safety.

So I think that we should work out a solution to the control problem in advance, so that we have it available by the time it is needed.

Now it might be that we cannot solve the entire control problem in advance because maybe some elements can only be put in place once you know the details of the architecture where it will be implemented.

But the more of the control problem that we solve in advance, the better the odds that the transition to the machine intelligence era will go well. (I suggest that experimentation on various alternative control systems should continue for as long as humans are alive)

This to me looks like a thing that is well worth doing, and I can imagine that if things turn out okay, people a million years from now might look back at this century and say that the one thing we did that really mattered was to get this thing right.

Note: Nick Bostrom. Philosopher. He asks big questions: What should we do, as individuals and as a species, to optimize our long-term prospects? Will humanity’s technological advancements ultimately destroy us?

It is not the machine that is learning. Are human algorithms forcing everyone to adapt or die?

Posted by: adonis49 on: November 16, 2020

Which machine learning algorithm should I use? How many and which one is best?

Note: in the early 1990s, I took graduate classes in Artificial Intelligence (AI) (the if…then series of questions and answers elicited from experts in their fields of work) and in neural networks developed by psychologists.

The concepts are the same, though upgraded with new algorithms and automation.

I recall a book with a Table (like the Mendeleev table in chemistry) that contained the terms, mental processes, mathematical concepts behind the ideas that formed the AI trend…

There are several lists of methods, depending on the field of study you are more concerned with.

One list consists of methods that human factors professionals are trained to use if need be, such as:

Verbal protocol, neural network, utility theory, preference judgments, psycho-physical methods, operational research, prototyping, information theory, cost/benefit methods, various statistical modeling packages, and expert systems.

There are those that are intrinsic to artificial intelligence methodology such as:

Fuzzy logic, robotics, discrimination nets, pattern matching, knowledge representation, frames, schemata, semantic network, relational databases, searching methods, zero-sum game theory, logical reasoning methods, probabilistic reasoning, learning methods, natural language understanding, image formation and acquisition, connectedness, cellular logic, problem solving techniques, means-end analysis, geometric reasoning system, algebraic reasoning system.

Hui Li on Subconscious Musings, posted on April 12, 2017

This resource is designed primarily for beginner to intermediate data scientists or analysts who are interested in identifying and applying machine learning algorithms to address the problems of their interest.

A typical question asked by a beginner, when facing a wide variety of machine learning algorithms, is “which algorithm should I use?”

The answer to the question varies depending on many factors, including:

- The size, quality, and nature of data.

- The available computational time.

- The urgency of the task.

- What you want to do with the data.

Even an experienced data scientist cannot tell which algorithm will perform the best before trying different algorithms.

We are not advocating a one and done approach, but we do hope to provide some guidance on which algorithms to try first depending on some clear factors.

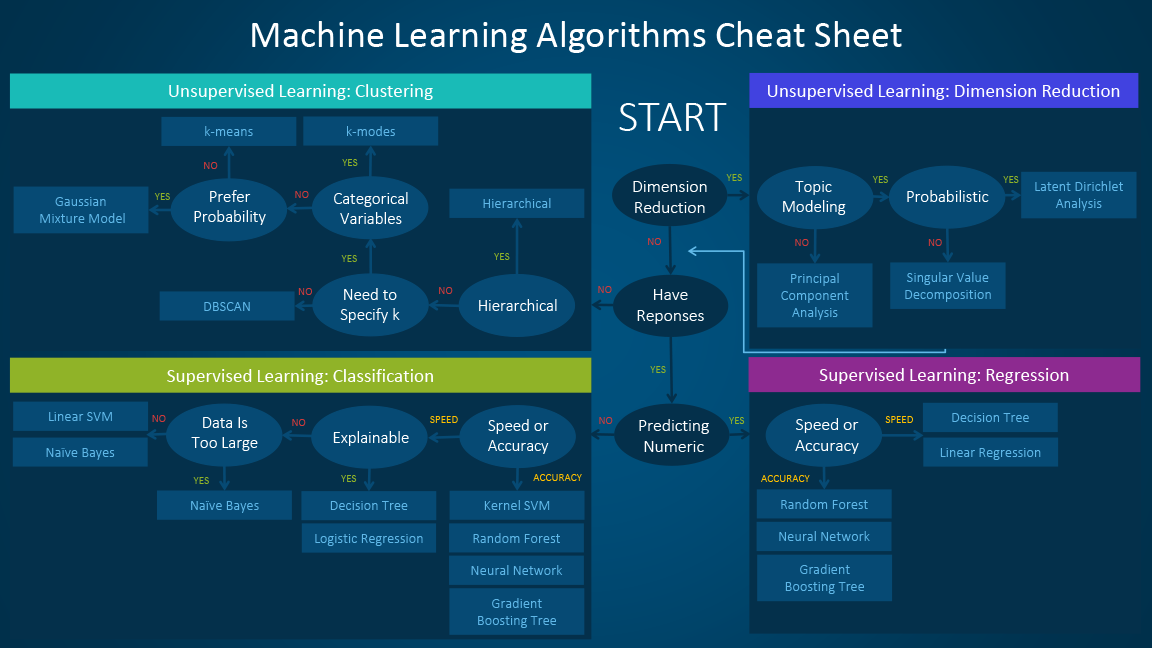

The machine learning algorithm cheat sheet

The machine learning algorithm cheat sheet helps you to choose from a variety of machine learning algorithms to find the appropriate algorithm for your specific problems.

This article walks you through the process of how to use the sheet.

Since the cheat sheet is designed for beginner data scientists and analysts, we will make some simplifying assumptions when talking about the algorithms.

The algorithms recommended here result from compiled feedback and tips from several data scientists and machine learning experts and developers.

There are several issues on which we have not reached an agreement, and for these issues we try to highlight the commonality and reconcile the differences.

Additional algorithms will be added later as our library grows to encompass a more complete set of available methods.

How to use the cheat sheet

Read the path and algorithm labels on the chart as “If <path label> then use <algorithm>.” For example:

- If you want to perform dimension reduction then use principal component analysis.

- If you need a numeric prediction quickly, use decision trees or linear regression.

- If you need a hierarchical result, use hierarchical clustering.
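A minimal sketch of the “If <path label> then use <algorithm>” reading follows. It assumes the chart is stored as a plain lookup table. The two entries are a hypothetical encoding of example paths, not the full SAS chart, and a path that matches no branch simply returns an empty list.

```python
# Hypothetical encoding of two cheat-sheet paths; not the complete chart.
CHEAT_SHEET = {
    "dimension reduction": ["principal component analysis"],
    "hierarchical result": ["hierarchical clustering"],
}


def recommend(path_label: str) -> list:
    """Read a path as: If <path label> then use <algorithm>."""
    # An unknown path returns [], i.e. no branch is a perfect match.
    return CHEAT_SHEET.get(path_label, [])


for label in CHEAT_SHEET:
    print(f"If you want {label}, then use {', '.join(recommend(label))}")
```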

Sometimes more than one branch will apply, and other times none of them will be a perfect match.

It’s important to remember these paths are intended to be rule-of-thumb recommendations, so some of the recommendations are not exact.

Several data scientists I talked with said that the only sure way to find the very best algorithm is to try all of them.

(Is that a process to find an algorithm that matches your world view on an issue? Or an answer that satisfies your boss?)

Types of machine learning algorithms

This section provides an overview of the most popular types of machine learning. If you’re familiar with these categories and want to move on to discussing specific algorithms, you can skip this section and go to “When to use specific algorithms” below.

Supervised learning

Supervised learning algorithms make predictions based on a set of examples.

For example, historical sales can be used to estimate the future prices. With supervised learning, you have an input variable that consists of labeled training data and a desired output variable.

You use an algorithm to analyze the training data to learn the function that maps the input to the output. This inferred function maps new, unknown examples by generalizing from the training data to anticipate results in unseen situations.

- Classification: When the data are being used to predict a categorical variable, supervised learning is also called classification. This is the case when assigning a label or indicator, either dog or cat to an image. When there are only two labels, this is called binary classification. When there are more than two categories, the problems are called multi-class classification.

- Regression: When predicting continuous values, the problem becomes a regression problem.

- Forecasting: This is the process of making predictions about the future based on the past and present data. It is most commonly used to analyze trends. A common example might be estimation of the next year sales based on the sales of the current year and previous years.
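The following k-nearest-neighbors classifier makes “learning from labeled examples to predict unseen ones” concrete. k-NN is not one of the algorithms covered in this article. It is swapped in only because it is one of the shortest examples of supervised classification. The features and data points are made up for illustration.

```python
import math
from collections import Counter

# Made-up labeled examples: (weight in kg, ear length in cm) -> label.
TRAIN = [
    ((4.0, 7.0), "cat"), ((3.5, 6.5), "cat"), ((5.0, 7.5), "cat"),
    ((20.0, 10.0), "dog"), ((25.0, 12.0), "dog"), ((18.0, 9.0), "dog"),
]


def knn_predict(x, train=TRAIN, k=3):
    """Predict a label for an unseen x by majority vote of its k nearest examples."""
    nearest = sorted(train, key=lambda example: math.dist(x, example[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(knn_predict((4.2, 6.8)), knn_predict((22.0, 11.0)))  # cat dog
```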

Semi-supervised learning

The challenge with supervised learning is that labeling data can be expensive and time consuming. If labels are limited, you can use unlabeled examples to enhance supervised learning. Because the machine is not fully supervised in this case, we say the machine is semi-supervised. With semi-supervised learning, you use unlabeled examples with a small amount of labeled data to improve the learning accuracy.
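Self-training is one simple semi-supervised scheme. It is chosen here for illustration; the article does not prescribe a method. The sketch works on made-up 1-D data:

1. Fit class centroids from two labeled points.
2. Pseudo-label the unlabeled points that are clearly closer to one centroid.
3. Refit and repeat.

Ambiguous points stay unlabeled. The `margin` confidence threshold is a hypothetical parameter.

```python
def centroids(pairs):
    """Mean of the labeled x values for each class."""
    sums = {}
    for x, label in pairs:
        total, count = sums.get(label, (0.0, 0))
        sums[label] = (total + x, count + 1)
    return {label: total / count for label, (total, count) in sums.items()}


def self_train(labeled, unlabeled, margin=1.0):
    """Repeatedly pseudo-label confident points and refit the class centroids."""
    labeled, pool = list(labeled), list(unlabeled)
    while pool:
        cents = centroids(labeled)
        confident = []
        for x in pool:
            (d1, best), (d2, _) = sorted((abs(x - m), lab) for lab, m in cents.items())[:2]
            if d2 - d1 >= margin:  # clearly closer to one class than to the next
                confident.append((x, best))
        if not confident:
            break  # the remaining points stay unlabeled
        labeled.extend(confident)
        newly = {x for x, _ in confident}
        pool = [x for x in pool if x not in newly]
    return centroids(labeled), labeled, pool


labeled = [(1.0, "A"), (9.0, "B")]  # only two labeled examples (made up)
unlabeled = [1.5, 2.0, 2.5, 7.5, 8.0, 8.5, 5.2]
cents, all_labeled, leftover = self_train(labeled, unlabeled)
print(cents, leftover)
```

The unlabeled points move the centroids from 1.0 and 9.0 to 1.75 and 8.25. The ambiguous point 5.2 is left unlabeled.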

Unsupervised learning

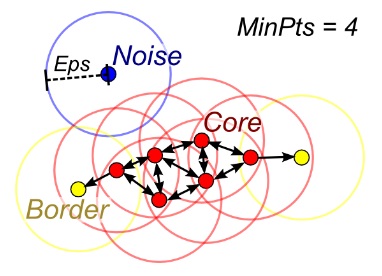

When performing unsupervised learning, the machine is presented with totally unlabeled data. It is asked to discover the intrinsic patterns that underlie the data, such as a clustering structure, a low-dimensional manifold, or a sparse tree and graph.

- Clustering: Grouping a set of data examples so that examples in one group (or one cluster) are more similar (according to some criteria) than those in other groups. This is often used to segment the whole dataset into several groups. Analysis can be performed in each group to help users to find intrinsic patterns.

- Dimension reduction: Reducing the number of variables under consideration. In many applications, the raw data have very high dimensional features and some features are redundant or irrelevant to the task. Reducing the dimensionality helps to find the true, latent relationship.

Reinforcement learning

Reinforcement learning analyzes and optimizes the behavior of an agent based on the feedback from the environment. Machines try different scenarios to discover which actions yield the greatest reward, rather than being told which actions to take. Trial-and-error and delayed reward distinguish reinforcement learning from other techniques.
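A minimal trial-and-error sketch follows: an epsilon-greedy agent facing a multi-armed bandit, which is a simplified reinforcement learning setting. It captures the loop of trying actions, observing rewards and preferring what paid off. It does not model delayed reward, which fuller methods such as Q-learning also handle. The reward means, the noise level and `epsilon` are made-up assumptions.

```python
import random


def epsilon_greedy(true_means, steps=2000, epsilon=0.1, noise=0.1, seed=0):
    """Learn action values purely from (noisy) reward feedback."""
    rng = random.Random(seed)
    k = len(true_means)
    counts = [0] * k
    values = [0.0] * k  # running average reward observed for each action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(k)  # explore: try a random action
        else:
            action = max(range(k), key=lambda a: values[a])  # exploit the best so far
        reward = rng.gauss(true_means[action], noise)  # feedback from the environment
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


values, counts = epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(len(values)), key=lambda a: values[a])
print(best, counts)
```

The agent is never told which action is best. It ends up preferring action 2 only because that action kept paying off.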

Considerations when choosing an algorithm

When choosing an algorithm, always take these aspects into account: accuracy, training time and ease of use. Many users put the accuracy first, while beginners tend to focus on algorithms they know best.

When presented with a dataset, the first thing to consider is how to obtain results, no matter what those results might look like. Beginners tend to choose algorithms that are easy to implement and can obtain results quickly. This works fine, as long as it is just the first step in the process. Once you obtain some results and become familiar with the data, you may spend more time using more sophisticated algorithms to strengthen your understanding of the data, hence further improving the results.

Even in this stage, the best algorithms might not be the methods that have achieved the highest reported accuracy, as an algorithm usually requires careful tuning and extensive training to obtain its best achievable performance.

When to use specific algorithms

Looking more closely at individual algorithms can help you understand what they provide and how they are used. These descriptions provide more details and give additional tips for when to use specific algorithms, in alignment with the cheat sheet.

Linear regression and Logistic regression

(Figures: linear regression; logistic regression)

Linear regression is an approach for modeling the relationship between a continuous dependent variable $y$ and one or more predictors $X$. The relationship between $y$ and $X$ can be linearly modeled as $y = \beta^T X + \epsilon$. Given the training examples $\{x_i, y_i\}_{i=1}^N$, the parameter vector $\beta$ can be learnt.
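For a single predictor, the least-squares estimate of the parameter vector has a closed form. This sketch assumes the common convention of absorbing the intercept into β through a constant feature, so β = (b0, b1). The data are made up.

```python
def fit_simple_linear(xs, ys):
    """Least-squares fit of y = b0 + b1 * x for a single predictor."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b1 = sxy / sxx             # slope
    b0 = mean_y - b1 * mean_x  # intercept
    return b0, b1


xs = [1, 2, 3, 4, 5]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]  # made-up, roughly y = 1 + 2x plus noise
b0, b1 = fit_simple_linear(xs, ys)
print(f"y = {b0:.2f} + {b1:.2f} x")  # y = 1.09 + 1.97 x
```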

If the dependent variable is not continuous but categorical, linear regression can be transformed to logistic regression using a logit link function. Logistic regression is a simple, fast yet powerful classification algorithm.

Here we discuss the binary case where the dependent variable $y$ only takes binary values, $y_i \in \{-1, 1\}$ for $i = 1, \ldots, N$ (this can easily be extended to multi-class classification problems).

In logistic regression we use a different hypothesis class to try to predict the probability that a given example belongs to the “1” class versus the probability that it belongs to the “-1” class. Specifically, we will try to learn a function of the form $p(y_i = 1 \mid x_i) = \sigma(\beta^T x_i)$ and $p(y_i = -1 \mid x_i) = 1 - \sigma(\beta^T x_i)$.

Here $\sigma(x) = \frac{1}{1 + \exp(-x)}$ is the sigmoid function. Given the training examples $\{x_i, y_i\}_{i=1}^N$, the parameter vector $\beta$ can be learnt by maximizing the log-likelihood of $\beta$ given the data set.
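A minimal sketch of fitting logistic regression with labels in {-1, 1} follows. With that convention the log-likelihood is the sum of log σ(y_i βᵀx_i), and its gradient is the sum of (1 − σ(y_i βᵀx_i)) y_i x_i.

Some assumptions:
- Plain gradient ascent with a fixed, hand-picked learning rate stands in for the more robust optimizers that real libraries use.
- The data are made up.
- The first feature is a constant 1 acting as the intercept.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Gradient ascent on sum_i log sigma(y_i * beta^T x_i), labels in {-1, 1}."""
    beta = [0.0] * len(X[0])
    for _ in range(epochs):
        grad = [0.0] * len(beta)
        for xi, yi in zip(X, y):
            m = yi * sum(b * v for b, v in zip(beta, xi))
            weight = 1.0 - sigmoid(m)  # d/dm log sigma(m) = 1 - sigma(m)
            for j, v in enumerate(xi):
                grad[j] += weight * yi * v
        beta = [b + lr * g for b, g in zip(beta, grad)]
    return beta


def prob_positive(beta, x):
    """p(y = 1 | x) = sigma(beta^T x)."""
    return sigmoid(sum(b * v for b, v in zip(beta, x)))


# Made-up data; the first feature is a constant 1 that acts as the intercept.
X = [[1, 0.5], [1, 1.0], [1, 1.5], [1, 3.0], [1, 3.5], [1, 4.0]]
y = [-1, -1, -1, 1, 1, 1]
beta = fit_logistic(X, y)
print(beta, prob_positive(beta, [1, 4.0]))
```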

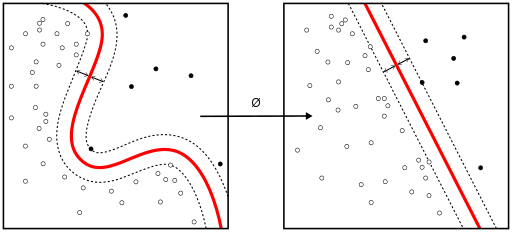

Linear SVM and kernel SVM

A support vector machine (SVM) training algorithm finds the classifier represented by the normal vector $w$ and bias $b$ of the hyperplane. This hyperplane (boundary) separates different classes by as wide a margin as possible. The problem can be converted into a constrained optimization problem:

$$\min_{w,\,b} \; \|w\| \quad \text{subject to} \quad y_i \left( w^T x_i - b \right) \ge 1, \quad i = 1, \ldots, n.$$


When the classes are not linearly separable, a kernel trick can be used to map a non-linearly separable space into a higher dimension linearly separable space.
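This is a minimal linear SVM sketch. It does not solve the hard-margin problem exactly. Instead it minimizes the common soft-margin relaxation by full-batch subgradient descent. The objective is λ/2·‖w‖² plus the average hinge loss max(0, 1 − y_i(wᵀx_i − b)), using the wᵀx − b sign convention.

Some assumptions:
- The values of `lam`, `lr` and `epochs` are untuned.
- The made-up data are symmetric about the origin.
- The kernel trick is not shown.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=1000):
    """Subgradient descent on lam/2*||w||^2 + mean hinge loss; labels in {-1, 1}."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regularizer
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Points inside the margin (or misclassified) contribute to the subgradient.
            if yi * (dot(w, xi) - b) < 1:
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b += yi / n  # derivative of 1 - yi*(w.x - b) with respect to b
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    return 1 if dot(w, x) - b >= 0 else -1


# Made-up, linearly separable data.
X = [(-2, -1), (-1, -2), (-2, -2), (2, 1), (1, 2), (2, 2)]
y = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(X, y)
print([predict(w, b, x) for x in X])  # [-1, -1, -1, 1, 1, 1]
```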

When most of the independent variables (features) are numeric, logistic regression and SVM should be the first things to try for classification. These models are easy to implement, their parameters are easy to tune, and their performance is also pretty good, so these models are appropriate for beginners.

Trees and ensemble trees

Decision trees, random forest and gradient boosting are all algorithms based on decision trees.

There are many variants of decision trees, but they all do the same thing – subdivide the feature space into regions with mostly the same label. Decision trees are easy to understand and implement.

However, they tend to overfit the data when we exhaust the branches and go very deep with the trees. Random forest and gradient boosting are two popular ways to use tree algorithms to achieve good accuracy while overcoming the overfitting problem.
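A minimal sketch of a single CART-style classification tree follows. Splits are chosen greedily by Gini impurity, and `max_depth` limits how deep the tree grows, and so how much it can overfit. Random forest and gradient boosting, which combine many such trees, are not shown. The data are made up, and the tuple-based tree representation is just one convenient choice.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0 for a pure node, larger for mixed nodes."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Greedy search for the (feature, threshold) that most reduces impurity."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [lab for r, lab in zip(rows, labels) if r[f] <= t]
            right = [lab for r, lab in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Leaves are class labels; internal nodes are (feature, threshold, left, right)."""
    majority = Counter(labels).most_common(1)[0][0]
    if depth == max_depth or len(set(labels)) == 1:
        return majority
    split = best_split(rows, labels)
    if split is None:
        return majority
    f, t = split
    go_left = [r[f] <= t for r in rows]
    left = build_tree([r for r, g in zip(rows, go_left) if g],
                      [lab for lab, g in zip(labels, go_left) if g], depth + 1, max_depth)
    right = build_tree([r for r, g in zip(rows, go_left) if not g],
                       [lab for lab, g in zip(labels, go_left) if not g], depth + 1, max_depth)
    return (f, t, left, right)


def predict(tree, row):
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree


# Made-up data: class "A" when both features are small, otherwise "B".
rows = [(1, 1), (2, 2), (1, 6), (2, 7), (7, 1), (8, 2), (7, 7)]
labels = ["A", "A", "B", "B", "B", "B", "B"]
tree = build_tree(rows, labels)
print(tree)  # (0, 2, (1, 2, 'A', 'B'), 'B')
```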

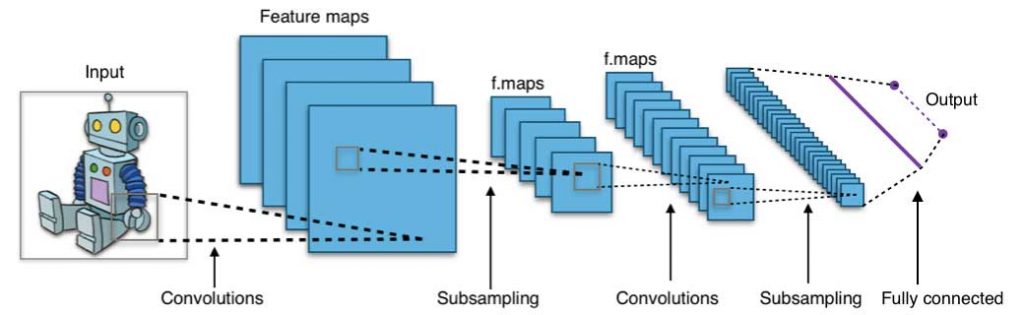

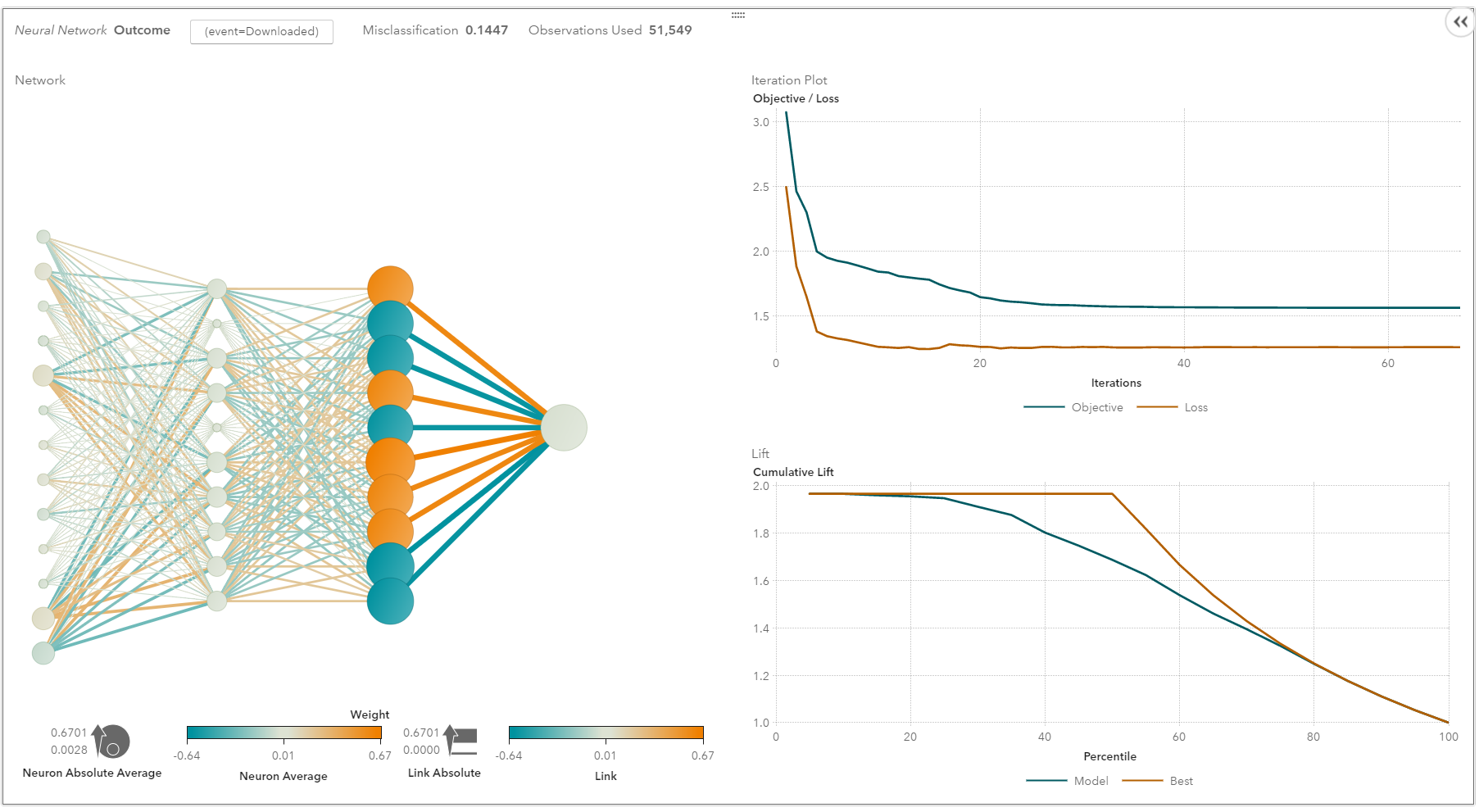

Neural networks and deep learning

Neural networks flourished in the mid-1980s due to their parallel and distributed processing ability.

Research in this field was impeded by the ineffectiveness of the back-propagation training algorithm that is widely used to optimize the parameters of neural networks. Support vector machines (SVM) and other simpler models, which can be easily trained by solving convex optimization problems, gradually replaced neural networks in machine learning.

In recent years, new and improved training techniques such as unsupervised pre-training and layer-wise greedy training have led to a resurgence of interest in neural networks.

Increasingly powerful computational capabilities, such as graphical processing unit (GPU) and massively parallel processing (MPP), have also spurred the revived adoption of neural networks. The resurgent research in neural networks has given rise to the invention of models with thousands of layers.

Shallow neural networks have evolved into deep learning neural networks.

Deep neural networks have been very successful for supervised learning. When used for speech and image recognition, deep learning performs as well as, or even better than, humans.

Applied to unsupervised learning tasks, such as feature extraction, deep learning also extracts features from raw images or speech with much less human intervention.

A neural network consists of three parts: input layer, hidden layers and output layer.

The training samples define the input and output layers. When the output layer is a categorical variable, then the neural network is a way to address classification problems. When the output layer is a continuous variable, then the network can be used to do regression.

When the output layer is the same as the input layer, the network can be used to extract intrinsic features.

The number of hidden layers defines the model complexity and modeling capacity.
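The following forward pass makes the three parts concrete. The network is a tiny 2-2-1 design (input, one hidden layer, output) with sigmoid activations. The weights are hand-picked, not learnt, so that the network computes XOR, a function that no single linear layer can represent. Training such weights with back-propagation is not shown.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One fully connected layer followed by a sigmoid activation."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hand-picked (not learnt) weights that make the network compute XOR.
HIDDEN_W, HIDDEN_B = [[20, 20], [-20, -20]], [-10, 30]  # units act like OR and NAND
OUTPUT_W, OUTPUT_B = [[20, 20]], [-30]                  # output acts like AND


def forward(x):
    hidden = layer(x, HIDDEN_W, HIDDEN_B)         # input layer -> hidden layer
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]   # hidden layer -> output layer


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, round(forward(x)))  # outputs 0, 1, 1, 0
```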


k-means/k-modes, GMM (Gaussian mixture model) clustering

(Figures: k-means clustering; Gaussian mixture model)

K-means/k-modes and GMM clustering aim to partition n observations into k clusters. K-means defines a hard assignment: each sample is associated with one and only one cluster. GMM, however, defines a soft assignment: each sample has a probability of being associated with each cluster. Both algorithms are simple and fast enough for clustering when the number of clusters k is given.
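This minimal k-means sketch (Lloyd's algorithm) shows the hard assignment step. Initializing with the first k points is a simplification; practical implementations use smarter seeding such as k-means++ and several restarts. The 2-D points are made up, and the GMM's soft assignment is not shown.

```python
import math


def kmeans(points, k, max_iters=100):
    """Lloyd's algorithm with hard assignments; seeded with the first k points."""
    centroids = [list(p) for p in points[:k]]
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point belongs to exactly one (the nearest) centroid.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(coord) / len(members) for coord in zip(*members)]
    return labels, centroids


points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (8.5, 9), (9, 8)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```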

DBSCAN

When the number of clusters k is not given, DBSCAN (density-based spatial clustering of applications with noise) can be used by connecting samples through density diffusion.
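A minimal DBSCAN sketch follows:
- A point is a core point if at least `min_pts` neighbors, counting itself, lie within `eps`.
- Clusters grow outward from core points.
- Points reachable from no core point are labeled noise (-1).

This naive version is O(n²), and the data and parameter values are made up.

```python
import math


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    NOISE = -1
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbors(i):
        # Includes the point itself, as in the usual DBSCAN definition.
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may be relabeled later as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable, but not a core point
                continue
            if labels[j] is not None:
                continue  # already assigned
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:  # core point: keep expanding
                queue.extend(j_neighbors)
    return labels


points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11), (5, 5)]
print(dbscan(points, eps=1.5, min_pts=3))  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

Note that the number of clusters is never passed in; it comes out of the density structure.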

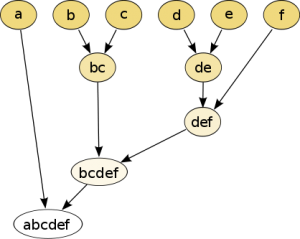

Hierarchical clustering

Hierarchical partitions can be visualized using a tree structure (a dendrogram). Hierarchical clustering does not need the number of clusters as an input, and the partitions can be viewed at different levels of granularity (i.e., clusters can be refined or coarsened) using different values of K.
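This minimal agglomerative (bottom-up) sketch uses single linkage on made-up 1-D data. The merge history is what a dendrogram draws. Stopping at different values of `k` gives coarser or finer partitions. Single linkage is one choice among several (complete, average, Ward).

```python
def single_linkage(points, k):
    """Agglomerative clustering of 1-D points with single linkage, stopped at k clusters."""
    clusters = [[p] for p in points]
    merges = []  # merge history, i.e. what a dendrogram draws

    def linkage(a, b):
        # Single linkage: distance between the closest pair of members.
        return min(abs(x - y) for x in a for y in b)

    while len(clusters) > k:
        pairs = [(i, j) for i in range(len(clusters)) for j in range(i + 1, len(clusters))]
        i, j = min(pairs, key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]))
        merges.append((clusters[i], clusters[j]))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters, merges


points = [1.0, 1.2, 5.0, 5.1, 9.0]
for k in (3, 2):
    print(k, single_linkage(points, k)[0])
```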

PCA, SVD and LDA

We generally do not want to feed a large number of features directly into a machine learning algorithm, since some features may be irrelevant or the “intrinsic” dimensionality may be smaller than the number of features. Principal component analysis (PCA), singular value decomposition (SVD), and latent Dirichlet allocation (LDA) can all be used to perform dimension reduction.

PCA is an unsupervised dimension-reduction method which maps the original data space into a lower-dimensional space while preserving as much information as possible. PCA basically finds a subspace that best preserves the data variance, with the subspace defined by the dominant eigenvectors of the data’s covariance matrix.
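A minimal sketch of finding the first principal component follows. It centers the data, forms the covariance matrix, and uses power iteration to approximate the matrix's dominant eigenvector. The made-up points lie exactly on the line y = 2x, so the component should point along (1, 2)/√5. Projecting onto it reduces the data from two dimensions to one.

```python
import math


def first_principal_component(data, iters=100):
    """Dominant eigenvector of the sample covariance matrix, via power iteration."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    v = [1.0] * d  # start vector; assumed not orthogonal to the top eigenvector
    for _ in range(iters):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v, centered


data = [(t, 2 * t) for t in (-2, -1, 0, 1, 2)]  # made-up points on y = 2x
pc, centered = first_principal_component(data)
scores = [sum(a * b for a, b in zip(row, pc)) for row in centered]  # 1-D projection
print(pc, scores)
```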

The SVD is related to PCA in the sense that SVD of the centered data matrix (features versus samples) provides the dominant left singular vectors that define the same subspace as found by PCA. However, SVD is a more versatile technique as it can also do things that PCA may not do.

For example, the SVD of a user-versus-movie matrix is able to extract the user profiles and movie profiles which can be used in a recommendation system. In addition, SVD is also widely used as a topic modeling tool, known as latent semantic analysis, in natural language processing (NLP).
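A minimal sketch of the recommendation idea follows. The leading singular triplet (σ, u, v) of a user-by-movie matrix gives one user-profile vector and one movie-profile vector, and σ·u_i·v_j estimates user i's rating of movie j.

Some assumptions:
- Power iteration in pure Python stands in for a library SVD.
- The made-up matrix is exactly rank one, so a single component reconstructs it.
- Real rating matrices need several components and a way to handle missing ratings.

```python
import math


def leading_singular_triplet(M, iters=50):
    """Power iteration on M^T M: returns (sigma, u, v) with M v = sigma u."""
    rows, cols = len(M), len(M[0])
    v = [1.0] * cols  # start vector; assumed not orthogonal to the answer
    for _ in range(iters):
        u = [sum(M[i][j] * v[j] for j in range(cols)) for i in range(rows)]  # M v
        v = [sum(M[i][j] * u[i] for i in range(rows)) for j in range(cols)]  # M^T u
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
    u = [sum(M[i][j] * v[j] for j in range(cols)) for i in range(rows)]
    sigma = math.sqrt(sum(x * x for x in u))
    u = [x / sigma for x in u]
    return sigma, u, v


# Made-up users x movies matrix, built to be exactly rank one.
ratings = [
    [2.0, 1.0, 0.5],
    [4.0, 2.0, 1.0],
    [6.0, 3.0, 1.5],
]
sigma, user_profile, movie_profile = leading_singular_triplet(ratings)
# Estimated rating of user i for movie j: sigma * user_profile[i] * movie_profile[j].
estimate = sigma * user_profile[2] * movie_profile[0]
print(sigma, estimate)
```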

A related technique in NLP is latent Dirichlet allocation (LDA). LDA is a probabilistic topic model, and it decomposes documents into topics in a similar way as a Gaussian mixture model (GMM) decomposes continuous data into Gaussian densities. Unlike the GMM, LDA models discrete data (words in documents), and it assumes that the topics are distributed a priori according to a Dirichlet distribution.

Conclusions

This workflow is easy to follow. The takeaway messages when trying to solve a new problem are:

- Define the problem. What problems do you want to solve?

- Start simple. Be familiar with the data and the baseline results.

- Then try something more complicated.

- Dr. Hui Li is a Principal Staff Scientist of Data Science Technologies at SAS. Her current work focuses on Deep Learning, Cognitive Computing and SAS recommendation systems in SAS Viya. She received her PhD degree and Master’s degree in Electrical and Computer Engineering from Duke University.

- Before joining SAS, she worked at Duke University as a research scientist and at Signal Innovation Group, Inc. as a research engineer. Her research interests include machine learning for big, heterogeneous data, collaborative filtering recommendations, Bayesian statistical modeling and reinforcement learning.

Natural Language Processing (NLP)? Is it a programming language? What does it do differently?

Posted by: adonis49 on: January 21, 2019

What Is Natural Language Processing And What Is It Used For?

Terence Mills


Terence Mills, CEO of AI.io and Moonshot, is an AI pioneer and digital technology specialist.

Artificial intelligence (AI) is changing the way we look at the world. AI “robots” are everywhere. (Mostly in Japan and China)

From our phones to devices like Amazon’s Alexa, we live in a world surrounded by machine learning.

Google, Netflix, data companies, video games and more, all use AI to comb through large amounts of data. The end result is insights and analysis that would otherwise either be impossible or take far too long.

It’s no surprise that businesses of all sizes are taking note of large companies’ success with AI and jumping on board. Not all AI is created equal in the business world, though. Some forms of artificial intelligence are more useful than others.

Today, I’m touching on something called natural language processing (NLP).

It’s a form of artificial intelligence that focuses on analyzing human language to draw insights, create advertisements, help you text (yes, really) and more. (And what of body language?)

But Why Natural Language Processing?

NLP is an emerging technology that drives many forms of AI you’re used to seeing.

The reason I’ve chosen to focus on this technology instead of, say, AI for math-based analysis is the increasingly wide range of applications for NLP.

Think about it this way.

Every day, humans say thousands of words that other humans interpret to do countless things. At its core, it’s simple communication, but we all know words run much deeper than that. (That’s the function of slang in community)

There’s a context that we derive from everything someone says, whether they imply something with their body language or in how often they mention something.

While NLP doesn’t focus on voice inflection, it does draw on contextual patterns. (Meaning: currently it doesn’t care about the emotions?)

This is where it gains its value (As if in communication people lay out the context first?).

Let’s use an example to show just how powerful NLP is when used in a practical situation. When you’re typing on an iPhone, like many of us do every day, you’ll see word suggestions based on what you have already typed and what you’re currently typing. That’s natural language processing in action.
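As a rough illustration of “contextual patterns”, here is about the simplest possible next-word suggester. It counts which word follows which (bigrams) in some text and suggests the most frequent followers. This is a toy under stated assumptions: it is not a description of how the iPhone keyboard actually works, and the tiny corpus is made up.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        followers[prev][nxt] += 1
    return followers


def suggest(followers, prev_word, n=3):
    """Most frequent next words after prev_word (empty list if never seen)."""
    return [w for w, _ in followers.get(prev_word.lower(), Counter()).most_common(n)]


corpus = "see you soon . see you tomorrow . see you soon . thank you so much"
model = train_bigrams(corpus)
print(suggest(model, "you"))  # ['soon', 'tomorrow', 'so']
```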

It’s such a little thing that most of us take for granted, and have been taking for granted for years, but that’s why NLP becomes so important. Now let’s translate that to the business world.

Say a company is trying to decide how best to advertise to its users. It can use Google to find common search terms that its users type when searching for its product. (In a nutshell, that’s the most urgent usage of NLP?)

NLP then allows for a quick compilation of the data into terms obviously related to their brand and those that they might not expect. Capitalizing on the uncommon terms could give the company the ability to advertise in new ways.

So How Does NLP Work?

As mentioned above, natural language processing is a form of artificial intelligence that analyzes human language. It takes many forms, but at its core, the technology helps machines understand, and even communicate with, human speech.

But understanding NLP isn’t the easiest thing. It’s a very advanced form of AI that’s only recently become viable. That means that not only are we still learning about NLP but also that it’s difficult to grasp.

I’ve decided to break down NLP in layman’s terms. I might not touch on every technical definition, but what follows is the easiest way to understand how natural language processing works.

The first step in NLP depends on the application of the system. Voice-based systems like Alexa or Google Assistant need to translate your words into text. That’s done (usually) using Hidden Markov Models (HMMs).

The HMM uses math models to determine what you’ve said and translate that into text usable by the NLP system. Put in the simplest way, the HMM listens to 10- to 20-millisecond clips of your speech and looks for phonemes (the smallest unit of speech) to compare with pre-recorded speech.
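Decoding an HMM usually means finding the most probable sequence of hidden states (here, phonemes) given the observed audio frames. The Viterbi algorithm does this by dynamic programming.

The sketch below is a toy:
- Two hypothetical phoneme states.
- Three made-up frame categories.
- Made-up probabilities.

Real recognizers use many more states, continuous acoustic features, and log probabilities to avoid numeric underflow.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Most probable hidden-state path for an observation sequence (toy HMM)."""
    # best[t][s] = (probability of the best path ending in s at step t, previous state)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        prev = best[-1]
        step = {}
        for s in states:
            p, from_state = max((prev[r][0] * trans_p[r][s], r) for r in states)
            step[s] = (p * emit_p[s][obs], from_state)
        best.append(step)
    # Backtrack from the most probable final state.
    state = max(states, key=lambda s: best[-1][s][0])
    prob = best[-1][state][0]
    path = [state]
    for step in reversed(best[1:]):
        state = step[state][1]
        path.append(state)
    return path[::-1], prob


# Hypothetical two-phoneme model with made-up probabilities.
states = ["/s/", "/a/"]
start_p = {"/s/": 0.4, "/a/": 0.6}
trans_p = {"/s/": {"/s/": 0.6, "/a/": 0.4}, "/a/": {"/s/": 0.3, "/a/": 0.7}}
emit_p = {
    "/s/": {"noisy": 0.6, "mixed": 0.3, "voiced": 0.1},
    "/a/": {"noisy": 0.1, "mixed": 0.4, "voiced": 0.5},
}
path, prob = viterbi(["noisy", "mixed", "voiced"], states, start_p, trans_p, emit_p)
print(path, prob)  # ['/s/', '/a/', '/a/'] with probability about 0.01344
```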

Next is the actual understanding of the language and context. Each NLP system uses slightly different techniques, but on the whole, they’re fairly similar. The systems try to break each word down into its part of speech (noun, verb, etc.).

This happens through a series of coded grammar rules that rely on algorithms that incorporate statistical machine learning to help determine the context of what you said.
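The statistical part can be illustrated with the most basic baseline tagger: tag each word with the part of speech it carried most often in a hand-tagged training corpus. The corpus below is made up, and defaulting unknown words to NOUN is an assumption. Because the baseline ignores context, it tags “runs” in “the runs ended” as a verb. Fixing that kind of error is what context-aware rules and statistical models add.

```python
from collections import Counter, defaultdict

# A tiny, made-up hand-tagged corpus of (word, tag) pairs.
TAGGED = [
    ("the", "DET"), ("dog", "NOUN"), ("runs", "VERB"),
    ("a", "DET"), ("dog", "NOUN"), ("barks", "VERB"),
    ("the", "DET"), ("runs", "NOUN"), ("ended", "VERB"),
    ("she", "PRON"), ("runs", "VERB"), ("fast", "ADV"),
]


def train_tagger(tagged):
    """Map each word to the tag it carried most often in training."""
    counts = defaultdict(Counter)
    for word, pos in tagged:
        counts[word][pos] += 1
    return {word: c.most_common(1)[0][0] for word, c in counts.items()}


def tag(sentence, lexicon, default="NOUN"):
    """Tag each whitespace-separated word; unknown words get `default`."""
    return [(w, lexicon.get(w, default)) for w in sentence.lower().split()]


lexicon = train_tagger(TAGGED)
print(tag("The dog runs", lexicon))  # [('the', 'DET'), ('dog', 'NOUN'), ('runs', 'VERB')]
```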

If we’re not talking about speech-to-text NLP, the system just skips the first step and moves directly into analyzing the words using the algorithms and grammar rules.

The end result is the ability to categorize what is said in many different ways. Depending on the underlying focus of the NLP software, the results get used in different ways.

For instance, an SEO application could use the decoded text to pull keywords associated with a certain product.

Semantic Analysis

When explaining NLP, it’s also important to break down semantic analysis. It’s closely related to NLP and one could even argue that semantic analysis helps form the backbone of natural language processing.

Semantic analysis is how NLP AI interprets human sentences logically. When the HMM method breaks sentences down into their basic structure, semantic analysis helps the process add content.

For instance, if an NLP program looks at the word “dummy” it needs context to determine if the text refers to calling someone a “dummy” or if it’s referring to something like a car crash “dummy.”
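One classic way to pick a word sense from context is a simplified Lesk-style overlap count. It chooses the sense whose signature words overlap most with the surrounding words. The two sense signatures for “dummy” below are hypothetical and hand-written, and ties fall back to the first sense listed. Production systems learn such associations from data.

```python
import re

# Hypothetical, hand-written sense signatures for the word "dummy".
SENSES = {
    "insult": {"you", "stupid", "idiot", "silly", "called"},
    "crash-test dummy": {"car", "crash", "test", "seat", "impact", "airbag"},
}


def disambiguate(sentence, senses=SENSES):
    """Return the sense whose signature shares the most words with the sentence."""
    context = set(re.findall(r"[a-z']+", sentence.lower()))
    # max() keeps the first sense listed when overlaps tie.
    return max(senses, key=lambda s: len(senses[s] & context))


print(disambiguate("The crash test dummy hit the airbag"))  # crash-test dummy
print(disambiguate("Don't be such a dummy, you idiot"))     # insult
```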

If the HMM method breaks down text and NLP allows for human-to-computer communication, then semantic analysis allows everything to make sense contextually.

Without semantic analysis, we wouldn’t have nearly the level of AI that we enjoy. As the process develops further, we can only expect NLP to benefit.

NLP And More

As NLP develops we can expect to see even better human to AI interaction. Devices like Google’s Assistant and Amazon’s Alexa, which are now making their way into our homes and even cars, are showing that AI is here to stay.

The next few years should see AI technology increase even more, with the global AI market expected to push $60 billion by 2025. Needless to say, you should keep an eye on AI.

Cost of Lemonade stand, Artificial Intelligence program, and are you doing it?

Posted by: adonis49 on: July 3, 2018


Three decades ago, I audited an Artificial Intelligence programming course. The professor never programmed one, but it was the rage.

The professor was candid and said: “The only way to learn this kind of programming is by doing it.” The engine was there to logically arrange the “If this, then do that” questions in order to answer a technical problem. Nothing to it.
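The “If this, then do that” engine can be sketched as forward chaining: keep firing any rule whose conditions are all known facts until nothing new can be concluded. The rules and fact names below are a hypothetical toy, not taken from the course.

```python
# Hypothetical rules: (conditions that must all be known, conclusion to add).
RULES = [
    ({"engine_cranks", "no_start"}, "fuel_or_spark_problem"),
    ({"fuel_or_spark_problem", "fuel_gauge_empty"}, "add_fuel"),
    ({"engine_silent"}, "check_battery"),
]


def forward_chain(facts, rules=RULES):
    """Fire "if this, then that" rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(forward_chain({"engine_cranks", "no_start", "fuel_gauge_empty"})))
```

Note the chaining: the second rule fires only because the first rule added a fact it needs.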

I failed even to try a very simple program: I had a heavy workload and didn’t have the passion for any engineering project at the time.

I cannot say that I know Artificial Intelligence, regardless of the many fancy technical and theoretical books I read on the subject.

Studying entrepreneurship without doing it is like studying the appreciation of music without listening to it.

(Actually, I studied many subjects, including the ones that were supposed to be practical, but failed to do or practice any of the skills. My intention was to stay away from theoretical subjects, and I ended up sticking to the theories. For example, I enrolled in Industrial Engineering thinking it was mostly a hands-on discipline. Wrong: it was mostly theoretical, simply because the university lacked labs, technical staff and machinery.)

The cost of setting up a lemonade stand (or whatever metaphorical equivalent you dream up) is almost 100% internal. Until you confront the fear and discomfort of being in the world and saying, “here, I made this,” it’s impossible to understand anything at all about what it means to be an entrepreneur. Or an artist.

Never enough

There’s never enough time to be as patient as we need to be.

Not enough slack to focus on the long-term, too much urgency in the now to take the time and to plan ahead.

That urgent sign post just ahead demands all of our intention (and attention), and we decide to invest in, “down the road,” down the road.

It’s not only more urgent, but it’s easier to run to the urgent meeting than it is to sit down with a colleague and figure out the truth of what matters and the why of what’s before us.

And there’s never enough money to easily make the investments that matter.

Not enough surplus in the budget to take care of those that need our help, too much on our plate to be generous right now.

The short term bills make it easy to ignore the long-term opportunities.

Of course, the organizations that get around the universal and insurmountable problems of not enough time and not enough money are able to create innovations, find resources to be generous and prepare for a tomorrow that’s better than today. It’s not easy, not at all, but probably (okay, certainly) worth it.

We’re going to spend our entire future living in tomorrow—investing now, when it’s difficult, is the single best moment.

Posted by Seth Godin on March 11, 2013

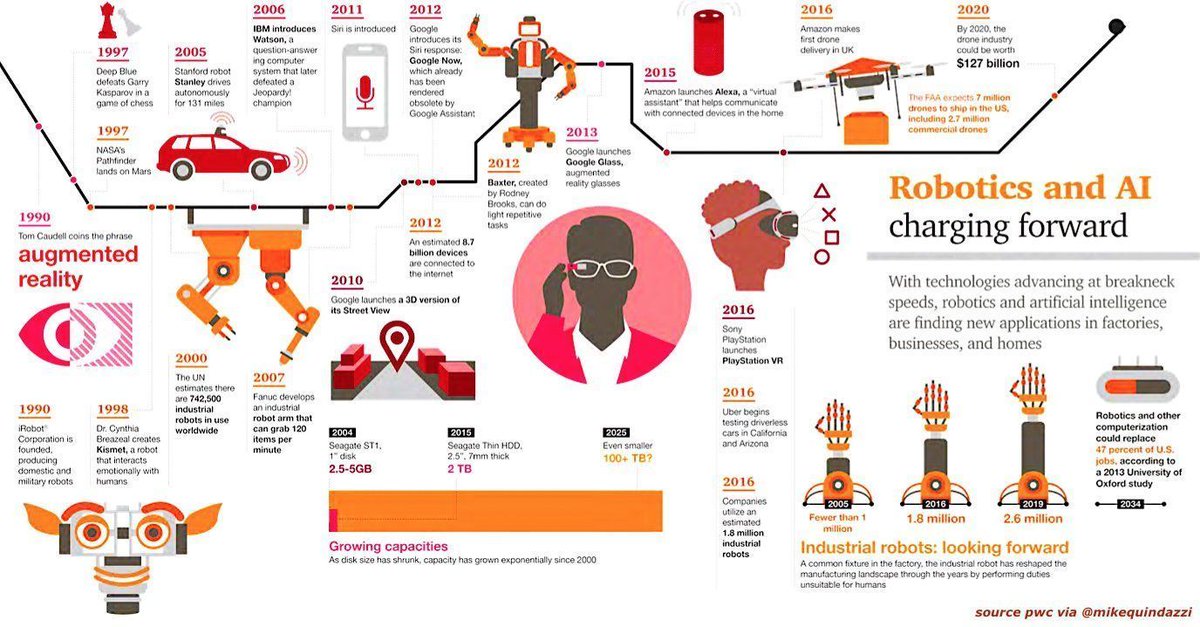

How fast are Robotics and Artificial Intelligence progressing?

Posted by: adonis49 on: October 17, 2017



Google launched an initiative to improve how users work with artificial intelligence

- The research initiative will involve collaborations with people in multiple Google product groups, as well as professors from Harvard and MIT.

- More informative explanations of recommendations could result from the research over time.

Alphabet on Monday said it has kicked off a new research initiative aimed at improving human interaction with artificial intelligence systems.

The People + AI Research (PAIR) program currently encompasses a dozen people who will collaborate with Googlers in various product groups — as well as outsiders like Harvard University professor Brendan Meade and Massachusetts Institute of Technology professor Hal Abelson.

The research could eventually lead to refinements in the interfaces of the smarter components of some of the world’s most popular apps. And Google’s efforts here could inspire other companies to adjust their software, too.

“One of the things we’re going to be looking into is this notion of explanation — what might be a useful on-time, on-demand explanation about why a recommendation system did something it did,” Google Brain senior staff research scientist Fernanda Viegas told CNBC in an interview.

The PAIR program takes inspiration from the concept of design thinking, which highly prioritizes the needs of people who will use the products being developed.

While end users, such as YouTube’s 1.5 billion monthly users, can be the target of that, the research is also meant to improve the experience of working with AI systems for AI researchers, software engineers and domain experts, Google Brain senior staff research scientist Martin Wattenberg told CNBC.

The new initiative fits in well with Google’s increasing focus on AI.

Google CEO Sundar Pichai has repeatedly said the world is transitioning from being mobile-first to AI-first, and the company has been taking many steps around that thesis.

Recently, for example, Google formed a venture capital group to invest in AI start-ups.

Meanwhile, Amazon, Apple, Facebook and Microsoft have also been active in AI over the past few years.

Google implemented a redesign of several of its apps in 2011 and, in more recent years, has been sprucing up many of its properties with its Material Design principles.

In 2016, John Maeda, then the design partner at Kleiner Perkins Caufield & Byers, pointed out in his annual report on design in technology that Google had been perceived as improving the most in design.

What is new is that Googlers are trying to figure out how to improve design specifically for AI components. And that’s important because AI is used in a whole lot of places around Google apps, even if you might not always realize it.

Video recommendations in YouTube, translations in Google Translate, article suggestions in the Google mobile app and even Google search results are all enhanced with AI.

Note: With no specific examples to clarify what Justin is talking about, consider this article free propaganda for Google.